Our whole life is immersed in uncertainty: nothing, apart from that, can be stated with certainty.

Bruno De Finetti

If, in the words of the mathematical statistician Bruno De Finetti, our only certainty is uncertainty, then there is no way around the study of uncertainty and its nuances. On the contrary, perhaps it is necessary to question everything that in everyday life has a semblance of certainty and to focus one’s attention on those aspects of apparently known phenomena that we do not really know.

Coming to terms with an uncertain world has become inevitable, especially at a time like ours, marked by major events and transformations dominated by uncertainty, such as pandemics and climate change, which test our ability to deal with the uncertain and to manage it. Thus, the tale of uncertainty becomes a fundamental tool for understanding the reality that surrounds us, and an opportunity to highlight the tools and skills that science offers for understanding and managing it.

This intention produced the exhibition Incertezza. Interpretare il presente, prevedere il futuro (Uncertainty. Interpreting the present, predicting the future), curated by INFN, Vincenzo Barone, Fernando Ferroni, Vincenzo Napolano, and Antonella Varaschin, held at Palazzo delle Esposizioni in Rome from 12 October 2021 to 27 February 2022, as part of the wider project Tre stazioni per Arte-Scienza (Three stations for Art-Science).

The research work and experimentation with techniques and languages that this exhibition entails provides the starting point for constructing a tale of uncertainty, a reflection on several levels that passes through the observation of phenomena, images and objects on display, the use of multimedia and interactive content, and the words of scholars and researchers who deal with the notion of uncertainty on a daily basis.

Contents

1. What is uncertainty

2. Uncertainty and measurement

3. Uncertainty, chance, probability

What is uncertainty

The word “uncertainty” […] is deceptive because it seems to refer to a lack of certainty or an insufficient certainty; but certainty probably never exists and those who cannot get used to this idea condemn themselves to living a life of false certainty.

[E. Boncinelli1, A. Mira2, L’incertezza è una fiera che non si può eliminare ma si può addomesticare (Uncertainty is a wild beast that cannot be killed but can be tamed), in Incertezza. Interpretare il presente, prevedere il futuro, exhibition catalogue]

“Uncertainty” is a common word, which we think we can define in a shareable way; but a deeper understanding of its meaning can be sought in etymology: the word “certain” comes from the Latin certus and has the same root as “cernere”, which means to separate, to divide:

But what do you “separate” when you believe you have certainties? We say: “The true from the false, what is right from what is wrong”, but in reality we separate, i.e., we discern or divide, similar things that are not interchangeable. The sharper the judges, the more similar the things they can discern. The more imaginative and pragmatic they are, the more they can be trusted to judge the interchangeability of the parts. On the contrary, uncertainty is a state of mind and reality in which we do not provide type 0 (false, black) and 1 (true, white) evaluations, but we have at our disposal graduated probabilities, shades of grey, numbers between 0 and 1, which we assign to events to indicate their level of plausibility.

[E. Boncinelli1, A. Mira2, L’incertezza è una fiera che non si può eliminare ma si può addomesticare (Uncertainty is a wild beast that cannot be killed but can be tamed), in Incertezza. Interpretare il presente, prevedere il futuro, exhibition catalogue]

Not only is there no certain knowledge of reality – a fact that may be difficult to come to terms with – but uncertainty is in fact ineradicable; it can at best be reduced and “managed” by refining our ability to “grasp the nuances”, to measure what we observe and interpret what we measure. For this reason, uncertainty plays a crucial role for those involved in science: the most accurate and precise results cannot be achieved without a thorough knowledge of the limits of the analyses and instruments chosen. Scientific research works on a double track: building increasingly precise instruments for measuring and refining the ability to isolate data from their uncertainty, the signal from the noise.

If we focus first on the attitudes and practice of science, we have to observe that, contrary to the common perception of the general public, scientists do not manage certainty on the basis of what they consider infallible data, but on the contrary they constantly manage uncertainty, on the basis of theoretical hypotheses and data, which however tend to mix signal and noise. For a scientist, certainty is always replaced by a measure of the degree of conviction and silence does not exist, it is simply “white noise”.

[S. Katsanevas3, Suspiciendo Despicio, Despiciendo Suspicio, in Incertezza. Interpretare il presente, prevedere il futuro, exhibition catalogue]

The need to find the most correct measurements, to isolate relevant data and distinguish them from fluctuations related to chance or to the measuring instrument, has led physicists to set strict statistical standards to determine when a new phenomenon can be said to have been observed with sufficient certainty – or rather, negligible uncertainty. Two recent results are particularly representative of uncertainty in experimental research: the measurement of the mass of the Higgs boson in 2012 by the ATLAS and CMS experiments at CERN in Geneva, which made it possible to talk about the discovery of the particle, and the measurement of the anomalous magnetic moment of the muon in 2021, for which, on the basis of current data, it is not yet possible to talk about a discovery: we must wait, collect more data, and tighten the checks on possible instrumentation errors. Such far-reaching results must be investigated and verified scrupulously, with full awareness of the uncertainty they carry and a constant effort to understand, prevent, and reduce it:

Experiences such as those of the ATLAS and CMS experiments show that uncertainty is an intrinsic feature of experimental science, of its methods and its processes. […] Considerable caution is therefore required before announcing a scientific discovery, despite the very human temptation to give in to enthusiasm. And we must not forget that, although physics is popularly known for its great discoveries, it progresses thanks to the long, continuous, and painstaking work of taming uncertainty.

[A. Zoccoli4, La logica della scoperta (The logic of discovery), in Incertezza. Interpretare il presente, prevedere il futuro, exhibition catalogue]
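The "strict statistical standards" mentioned above can be made concrete with a small sketch. The text does not name a threshold; the convention in particle physics is to speak of a discovery at five standard deviations ("5 sigma") and of evidence at three. Assuming the background fluctuations follow a Gaussian distribution (a simplification), the code converts a significance in sigma into the probability that chance alone would produce an excess at least that large. The function name is illustrative.

```python
import math


def one_sided_p_value(n_sigma: float) -> float:
    """Probability that a standard Gaussian fluctuation exceeds n_sigma.

    Assumes purely Gaussian noise, which is a simplification of the
    statistical treatment used in real analyses.
    """
    return 0.5 * math.erfc(n_sigma / math.sqrt(2.0))


for n_sigma in (3.0, 5.0):
    p = one_sided_p_value(n_sigma)
    print(f"{n_sigma:.0f} sigma -> p = {p:.2e} (about 1 in {1 / p:,.0f})")
```

Under these assumptions, a 5 sigma excess corresponds to a chance probability of roughly one in 3.5 million, which is why a 3 sigma result is treated as a hint to be followed up rather than as a discovery.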

Uncertainty and measurement

Measuring generates information and information is essential for creating knowledge. Information can be understood as the partial solution to the problem of uncertainty, since it makes it possible to respond to questions relating to the essence of an entity and to the nature of its characteristics. Measuring is, therefore, essential in the scientific process of formulating and verifying a hypothesis. On the other hand, uncertainty is linked to situations in which information is imperfect or absent.

[D. Wiersma5, The importance of increasingly precise measurements, in Uncertainty. Interpreting the Present, Predicting the Future, exhibition catalogue]

Our observations of the world are always accompanied by an inevitable margin of uncertainty. Such uncertainty depends on the tools that we use, on random fluctuations, on the randomness of measurement conditions, and is intrinsic to the process of measurement and, therefore, impossible to eliminate.

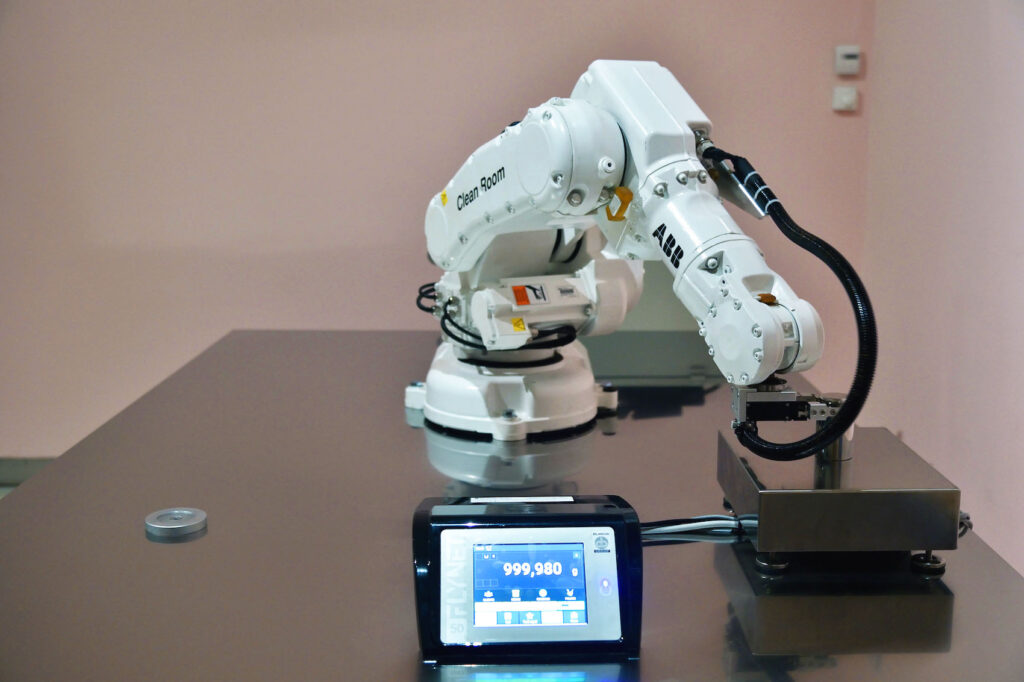

Even when the measuring process is automated, and the error that we as human beings can make in measuring is thus reduced, uncertainty is still present. The exhibition Incertezza (Uncertainty) demonstrates this with an installation in which an automated mechanical arm repeatedly raises a one-kilogram metal weight and places it on a scale, recording the result each time. Although the arm repeats the measurement in an identical way, without changing either the weight or the scale, the measured mass is always slightly different.

The weight measuring process is always repeated in the same way, but the result varies slightly on a case-by-case basis.
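A minimal sketch of how such repeated readings are usually summarised: the mean is taken as the best estimate, the sample standard deviation describes the scatter of single readings, and the standard error of the mean describes the uncertainty of the estimate. The readings below are simulated with an assumed Gaussian noise of 0.05 g; they are hypothetical and are not data from the installation.

```python
import math
import random
import statistics


def summarise(readings):
    """Return mean, sample standard deviation, and standard error of the mean."""
    mean = statistics.mean(readings)
    spread = statistics.stdev(readings)
    standard_error = spread / math.sqrt(len(readings))
    return mean, spread, standard_error


# Hypothetical readings: a 1000 g weight measured 200 times with
# 0.05 g of Gaussian noise (assumed values, for illustration only).
rng = random.Random(42)
readings = [rng.gauss(1000.0, 0.05) for _ in range(200)]

mean, spread, standard_error = summarise(readings)
print(f"mean = {mean:.3f} g, spread = {spread:.3f} g, "
      f"standard error = {standard_error:.4f} g")
```

The standard error shrinks with the square root of the number of readings, so repeating the measurement narrows the uncertainty on the mean without ever bringing it to zero.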

The search for precision in measuring becomes even more important when it comes to establishing the units of measurement with which we quantify physical quantities. The most widespread measuring system, which we also adopt, is the International System of Units (SI). The SI is based on seven base units: the kilogram for mass, the metre for length, the second for time, the kelvin for temperature, the ampere for electric current, the mole for amount of substance, and the candela for luminous intensity. In Italy, the Istituto Nazionale di Ricerca Metrologica (INRiM, Italian National Institute of Metrology Research) ensures the production, maintenance, and development of the SI units of measurement. Since 2019, none of the base units is defined by means of a physical sample, which could be subject to alterations over time; all are defined by fixing the numerical values of seven defining constants. Because these are constants, they do not depend on any reference sample, and their values were fixed on the basis of the most accurate measurements available. The last unit to be freed from a physical sample was the kilogram: from 1889, its definition coincided with the mass of a platinum-iridium cylinder that constituted the international prototype of the kilogram. This cylinder could, however, change slightly over time due to the deposit of contaminants; since 2019, the kilogram has instead been defined based on the Planck constant.

Increasingly precise and reliable measurements are essential for industry, the environment, and health, but are also key to further penetrating the mysteries of the universe:

Scientists love uncertainty. They love it! By exploring the region of uncertainty, a scientist is able to discover new phenomena, verify or contest models, and always uncover new details to explain the world.

[D. Wiersma5, The importance of increasingly precise measurements, in Uncertainty. Interpreting the Present, Predicting the Future, exhibition catalogue]

Although it cannot be eliminated, experimental uncertainty may, however, be reduced, and scientific research is also moving in this direction: increasingly precise measuring equipment may be decisive in making new discoveries. One example among many is gravitational waves, which were measured for the first time in 2015, one hundred years after they were predicted by Albert Einstein in his general theory of relativity.

To detect these almost imperceptible oscillations in spacetime, special instruments able to measure very small displacements had to be built: gravitational wave interferometers. The 2015 observations were made thanks to the two interferometers of the LIGO experiment, installed in the USA (in Louisiana and in the state of Washington), and to the international collaboration in which Italy participates with the Virgo experiment, installed in Cascina (in the province of Pisa).

The video shows the operation of a gravitational wave interferometer. The interferometer consists of two perpendicular arms that are several kilometres long, inside of which laser beams travel; having reached the ends, the two beams are reflected by two mirrors and are reunited, forming a particular image, called an interference figure. A change in the length of the arms, even a very small one such as that generated by gravitational waves – which deform spacetime as they pass through, lengthening one arm while shortening the other – causes a change in the distance of the mirrors and, thus, in the length of the laser’s path. As a result, the interference figure is also changed.
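The link between arm length and interference figure described above can be sketched for an idealised Michelson interferometer. If one arm is longer than the other by ΔL, the two beams return with a round-trip path difference of 2ΔL, and the fraction of light reaching one output port is cos²(2πΔL/λ). The 1064 nm wavelength is an assumed value typical of infrared lasers, and the function name is illustrative; real detectors add optical cavities and other refinements that this sketch ignores.

```python
import math


def output_fraction(delta_length_m: float, wavelength_m: float) -> float:
    """Fraction of the input light at one output port of an ideal Michelson
    interferometer whose arms differ in length by delta_length_m."""
    # The beam travels each arm twice, so the path difference is doubled.
    phase_difference = 2.0 * math.pi * (2.0 * delta_length_m) / wavelength_m
    return math.cos(phase_difference / 2.0) ** 2


wavelength = 1064e-9  # assumed laser wavelength in metres

for fraction_of_wavelength in (0.0, 1 / 16, 1 / 8, 1 / 4):
    delta = fraction_of_wavelength * wavelength
    print(f"arm difference = {delta:.3e} m -> "
          f"output fraction = {output_fraction(delta, wavelength):.3f}")
```

A difference of only a quarter of a wavelength is enough to turn a fully bright output into a fully dark one, which is what makes the interference figure such a sensitive probe of tiny changes in length.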

Even extremely precise instruments, however, cannot completely avoid uncertainty. To understand what we measure, we must refine our ability to analyse the data collected in the process of measuring in order to correctly interpret them, recognising and taking account of the sources of error that we cannot remove and also appealing to one of the most powerful mathematical tools for keeping “chance” in check: the theory of probability.

Uncertainty, chance, probability

What is probability? How can we define it? It may seem like a piece of cake, but try to do it yourself: it isn’t at all easy; everyone thinks they know what probability is, but we can’t define it.

[G. Parisi7, The value of uncertainty, in Uncertainty. Interpreting the Present, Predicting the Future, exhibition catalogue]

The theory of probability is now one of the mathematical tools that the natural and social sciences use to describe reality and assess its uncertainty. Its roots, however, lie in the solution of seemingly remote problems concerning games of chance. Brilliant minds such as Blaise Pascal and Pierre de Fermat were already interested in questions of this kind in the 1600s, but the first elements of the theory of probability are attributed to Girolamo Cardano, who in the mid-1500s dedicated an essay to dice games, a topic to which Galileo Galilei would later also devote some time.

The theory took on a more defined form in the following century, thanks to the work of Jakob Bernoulli and Pierre-Simon de Laplace on the so-called “law of large numbers”; in the mid-1700s, Bayes’ theorem on conditional probability, i.e. the probability of an event given that another event has occurred, was also formulated. It is still of fundamental importance, for example, for interpreting the result of a clinical test.
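The clinical test example can be worked through with a short sketch of Bayes' theorem. The prevalence, sensitivity, and specificity used here are hypothetical numbers chosen for illustration, and the function name is likewise an assumption.

```python
def probability_ill_given_positive(prevalence: float,
                                   sensitivity: float,
                                   specificity: float) -> float:
    """Bayes' theorem: P(ill | positive test).

    prevalence  = P(ill)
    sensitivity = P(positive | ill)
    specificity = P(negative | healthy)
    """
    true_positive = sensitivity * prevalence
    false_positive = (1.0 - specificity) * (1.0 - prevalence)
    return true_positive / (true_positive + false_positive)


# Hypothetical test: 1% of people are ill, the test detects 99% of the ill
# and correctly clears 95% of the healthy.
posterior = probability_ill_given_positive(
    prevalence=0.01, sensitivity=0.99, specificity=0.95
)
print(f"P(ill | positive) = {posterior:.3f}")
```

With these assumed figures, only about one positive result in six belongs to a person who is actually ill, because the many healthy people produce more false positives than the few ill people produce true ones.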

The true revolution came about, however, in the 1900s with Bruno de Finetti, who introduced a new idea of probability as a measure of our “reasonable” expectation that an event will occur. With this new definition, the calculation of probabilities increasingly proved to be a coherent and effective guide for reasoning and acting in conditions of uncertainty.

For some quantities, such as the W boson mass, the error bars progressively reduced over time thanks to the development of increasingly precise measurement instruments and procedures.

A very clear way to visualise the uncertainty of a measurement and its link with probability is the error bars that can be drawn around the measured value. Owing to random or systematic errors, or to genuine limits, each value obtained from a direct or indirect measurement does not correspond precisely to the “true” value of the measured quantity. For this reason, the measured value is always associated with a range of values within which the “true” value of the quantity is expected to lie with a certain probability. This range is represented graphically by the error bars.
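As a rough illustration of the phrase "with a certain probability", the sketch below assumes that the measurement errors follow a Gaussian distribution with standard deviation sigma, which is a common but not universal assumption. It returns the range spanned by an error bar of k sigma and the probability that this range contains the "true" value. The measured value and sigma are hypothetical numbers in arbitrary units.

```python
import math


def error_bar(value: float, sigma: float, k: float = 1.0):
    """Return the interval value ± k*sigma and its Gaussian coverage probability."""
    coverage = math.erf(k / math.sqrt(2.0))
    return value - k * sigma, value + k * sigma, coverage


# Hypothetical measurement in arbitrary units.
for k in (1.0, 2.0, 3.0):
    low, high, coverage = error_bar(10.0, 0.2, k)
    print(f"{k:.0f} sigma: [{low:.1f}, {high:.1f}] covers {coverage:.1%}")
```

Under the Gaussian assumption, an error bar of one sigma contains the "true" value about 68% of the time, two sigma about 95%, and three sigma about 99.7%.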

To reduce uncertainty, one tries to minimise errors during measurements and to keep improving measuring instruments and techniques. These graphs show the evolution over time of the error bars around the value of some quantities: the smaller the bars are, the closer we get to knowing the “true” value.

After a visitor speaks a sequence of 0s and 1s into the microphone, an algorithm calculates Shannon’s entropy, a parameter that estimates the unpredictability, or randomness, of a sequence of values.

In parallel with the progress in the precision of instruments and the accuracy of measurements, 20th-century mathematics developed and refined its capacity to interpret uncertainty, but not just that: today we are able to describe and quantify the unpredictability of a process. The possibility of estimating chance is crucial for processes that are based on sequences of random values, such as data encryption. A useful parameter in this sense is Shannon’s entropy, introduced in 1948 by the mathematician Claude Shannon. The more random the sequence of symbols is, the larger the value of the parameter. Since our brain tends to involuntarily recreate sequences that contain elements of order and regularity, we cannot generate a totally random sequence, despite our efforts. Simulating perfect randomness is impossible, even for the most powerful computers. An installation created for the “Incertezza” (Uncertainty) exhibition makes it possible to estimate how close we can get to total randomness, by calculating Shannon’s entropy for a sequence of 0s and 1s spoken into a microphone.
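A minimal sketch of how Shannon's entropy can be estimated for a sequence of 0s and 1s. The algorithm used by the installation is not described here, so this is only one plausible approach: it counts how often each block of symbols occurs and applies the formula H = Σ p · log2(1/p). The `block_size` parameter is an assumption added to show that counting single symbols alone can miss obvious regularities.

```python
import math
from collections import Counter


def shannon_entropy(sequence: str, block_size: int = 1) -> float:
    """Estimate the entropy, in bits per block, of the overlapping blocks
    of length block_size found in sequence."""
    blocks = [sequence[i:i + block_size]
              for i in range(len(sequence) - block_size + 1)]
    total = len(blocks)
    counts = Counter(blocks)
    # H = sum over observed blocks of p * log2(1 / p)
    return sum((n / total) * math.log2(total / n) for n in counts.values())


for seq in ("0000000000", "0101010101", "0110100110"):
    print(seq,
          f"single symbols: {shannon_entropy(seq):.3f} bits,",
          f"pairs: {shannon_entropy(seq, block_size=2):.3f} bits")
```

A constant sequence scores zero. A strictly alternating sequence reaches the maximum of 1 bit when only single symbols are counted, yet its pairs fall far short of the 2 bits that a truly random sequence would approach, which reveals the hidden regularity.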

Although the theory of probability is a key resource for interpreting and knowing the world through understanding uncertainty, the progress in knowledge of the last century has confronted us with the fact that, for some phenomena in particular, uncertainty is an element that cannot be eliminated. The quantum world and chaotic phenomena force us to get to grips with a form of uncertainty that no longer depends on our instruments or the number of measurements that we manage to make, but is an intrinsic feature of the structure of reality.

1Edoardo Boncinelli, Physicist and geneticist, Vita-Salute San Raffaele University

2Antonietta Mira, Statistician, Università della Svizzera Italiana

3Stavros Katsanevas, Physicist, EGO European Gravitational Observatory, Université Paris Diderot

4Antonio Zoccoli, Physicist, National Institute for Nuclear Physics (INFN), University of Bologna

5Diederik Wiersma, Physicist, Istituto Nazionale di Ricerca Metrologica (INRiM, National Institute of Metrology Research), LENS European Laboratory for Non-linear Spectroscopy, University of Florence

6Fernando Ferroni, Physicist, Gran Sasso Science Institute (GSSI), National Institute for Nuclear Physics (INFN)

7Giorgio Parisi, Physicist, Accademia Nazionale dei Lincei, Sapienza University of Rome, National Institute for Nuclear Physics (INFN)